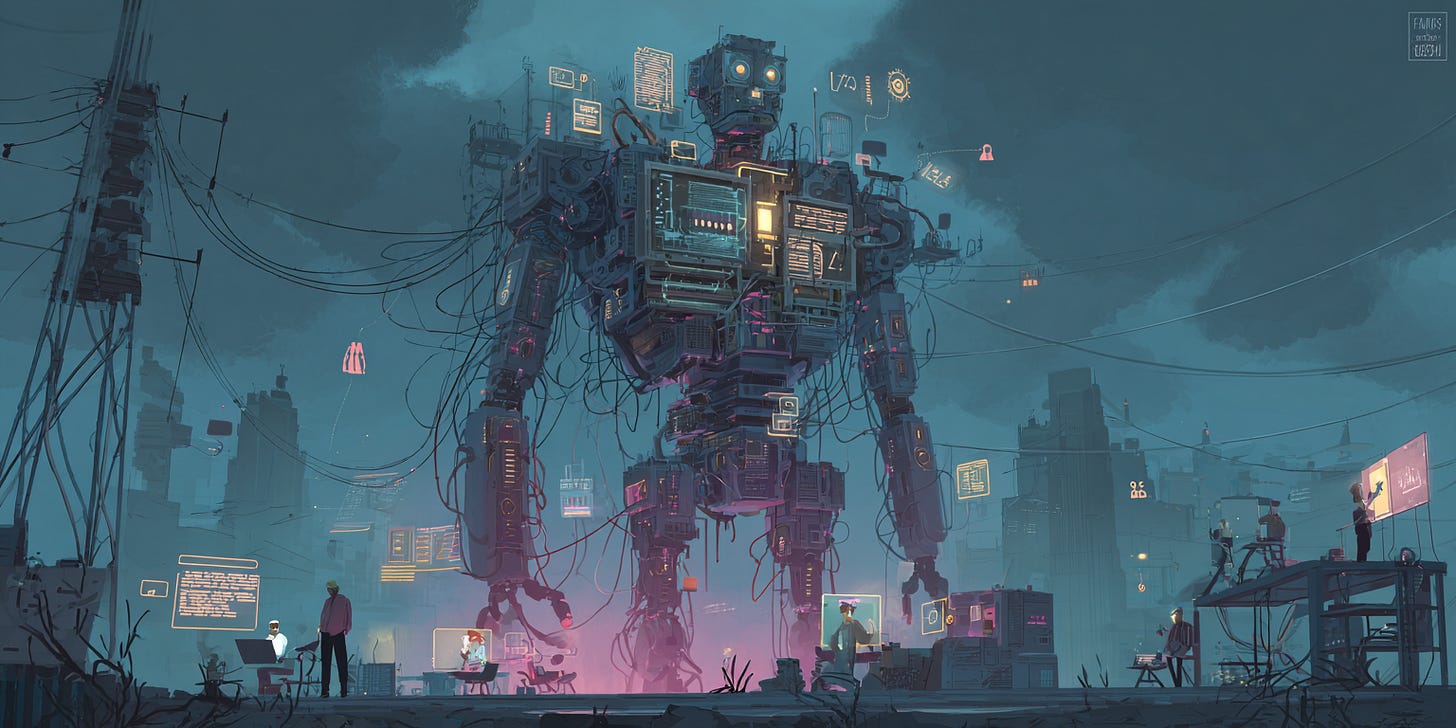

Frankenstein AI

Building it could kill your business

When I talk about Frankenstein AI, I’m not talking about some sci-fi future where AI becomes self-aware and turns on humanity.

I’m talking about something far more realistic—and far more dangerous.

Frankenstein AI is what happens when companies start stitching together a bunch of different LLMs, agents, tools, APIs, and vendors, hoping that all of it magically works as one cohesive system. It looks impressive in demos. It sounds innovative in board meetings. And it feels cheaper than buying big enterprise software.

Until it isn’t.

I use the term Frankenstein AI very intentionally. Not just because it’s stitched together from different parts, but because if you go back to the original Frankenstein story, it wasn’t really a monster story. It was a story about abandonment. Something powerful was created, released into the world, and then left without real ownership, care, or long-term responsibility. The destruction didn’t come from malice—it came from neglect.

That’s exactly the pattern I’m starting to see with AI inside organizations today. The problem isn’t that AI doesn’t work. The problem is how companies are deploying it.

What I’m seeing more and more is organizations layering AI on top of already messy systems. One team builds an agent on one LLM. Another team builds a different tool on a different model. A third vendor brings in their own architecture. Everything gets “glued together” with APIs, and everyone assumes someone else is responsible for making sure the whole thing doesn’t fall apart.

Nobody owns the full system. Nobody owns what happens when it breaks. And when it breaks, it doesn’t break gently. What’s interesting is how differently this plays out depending on the size of the company.

Fortune 500 Global Companies

Many large global firms are already struggling to contain what I would call early-stage Frankenstein AI. When you have tens of thousands of employees spread across dozens of countries, you are automatically fighting an uphill battle. How does a CTO sitting in the U.S. realistically prevent a small marketing team in Australia or India from signing up for their own LLM subscription at $20 or $300 a month to automate a task? More importantly, how would they even know it’s happening?

That’s the real challenge. In an “AI cloud” world, it’s incredibly easy to bypass IT entirely. No servers. No installs. No approvals. Just a credit card and a browser.

Now layer on the fact that most global enterprises work with dozens, sometimes hundreds, of development agencies and consulting firms. Each one is incentivized to ship fast. Each one may be building custom agents or AI-powered workflows for a different department. Suddenly the question isn’t just what is being built, but which LLM it is built on, how it is governed, and who owns it once the vendor rolls off.

Everyone is trying to sell AI services to global brands right now. Without strong controls, it’s easy to imagine dozens of disconnected AI systems quietly creeping into production. That’s how you end up with a long-term maintenance nightmare that no one fully understands. I wouldn’t be surprised if, in 2026, we see several large enterprises deliberately slow down AI adoption—not because they don’t believe in AI, but because they need time to contain Frankenstein AI before it spreads further.

The smarter large enterprises have already recognized that uncontrolled AI sprawl is an existential risk. So they’re doing the boring but correct thing: making executive, board-level decisions around a single foundational LLM for the entire organization. Whether it’s Gemini, Copilot, or something similar, the rule is simple—this is the model, this is the environment, and everything else is off-limits.

That kind of mandate isn’t about innovation theater. It’s about containment. It’s about security. It’s about ownership. These companies understand that you cannot have tens of thousands of employees uploading internal documents into random AI tools and just hope that nothing leaks, drifts, or comes back to hurt you later.

Not surprisingly, many global brands are now hiring a Chief AI Officer to lead this effort. This role isn’t just about building new AI-powered capabilities. Just as important, it’s about stopping the unchecked spread of AI inside the organization—setting standards, enforcing compliance, and deciding what not to build.

Early-Stage Companies

Early-stage startups are a very different story.

They’re small. They have limited surface area. They’re usually building narrow tools to solve very specific problems. If something breaks, they tend to know exactly what broke and why. They simply don’t have enough organizational complexity to accidentally create a systemic AI monster.

That doesn’t mean risk is zero. You can still have employees using personal LLM accounts to draft emails or analyze data and, in the process, inadvertently sharing internal documents. But in a startup, this is usually manageable. Clear leadership, a simple AI usage policy, and strong cultural norms around data handling can contain this risk pretty effectively.

Because of that, the likelihood of a true Frankenstein AI nightmare emerging inside an early-stage startup is relatively low. The biggest danger lies in mid-sized companies.

Mid-Sized Businesses (SMBs)

The companies I worry about most are the ones doing a few hundred million dollars a year in revenue. Solid businesses. Decent margins. Valuations in the low billions. IT is important, but it’s not the core business. And budgets, while meaningful, are still constrained.

These companies live in a brutal no-man’s-land.

They’re too big for lightweight, off-the-shelf software. They’re too small to justify massive SAP or Oracle implementations. Spending five million on licenses and fifteen million on consultants just doesn’t make sense for one function of the business. Paying over a million a year in support fees feels insane.

So they compromise.

They buy mid-tier systems that “get the job done” but are painful to use. They slow operations down. They frustrate teams. And eventually someone asks the inevitable question: can AI just do this for us?

And in many cases, the answer is yes.

But that’s where Frankenstein AI starts forming.

Instead of one cohesive system, these companies end up hiring different agencies and developers to solve different problems. One group builds a custom AI-powered supply chain tool. Another builds something for marketing. Another handles finance or accounts payable. Each system works fine on its own. Each team optimizes for its own scope. Each vendor chooses their own architecture, their own LLM, their own agent framework.

Then someone says, “We’ll just stitch it all together with APIs.”

That’s when reality shows up.

APIs change. Models change. Pricing changes. Vendors roll off. Foundational models get updated underneath you. Suddenly data doesn’t reconcile between systems. Finance blames operations. Operations blames the API layer. The API team blames the AI agent. The AI vendor blames the underlying model.

And nobody owns the whole thing. That’s Frankenstein AI fully alive.

What makes this especially dangerous is that it looks cheaper than enterprise software—until you zoom out over time. Multiple LLM licenses. Multiple agent layers. Multiple vendors. Multiple maintenance contracts. More fragility, not less. More cost, not less. You didn’t avoid enterprise complexity. You recreated it without governance.

If I put myself in the shoes of a CTO hired into a $300–$500 million business, the first thing I would do is act like a Fortune 500 company—even if I couldn’t spend like one.

The single most important decision is picking a foundational LLM. Explicitly. At the executive level. Not by committee. Not by accident. Making it clear that any enterprise application built inside the company must sit on top of that foundation.

That doesn’t mean buying licenses for every employee. Most mid-market companies can’t afford that. But it does mean enforcing a standard for systems, agents, and enterprise workflows.

The second thing I’d do is shut down rogue AI usage immediately. This is where Frankenstein AI quietly sneaks in. Employees using personal AI accounts to upload internal documents. Departments experimenting without telling IT. Helpful tools that no one tracks. Even if the data isn’t used for training, you still don’t want your internal documents floating around outside your control.

And finally, I’d make it clear that any vendor building anything with AI must go through IT approval, follow defined protocols, and build on the company’s chosen foundation. Even if your internal team can’t build everything themselves, they still have to own the architecture.
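To make those three rules concrete, here is a minimal sketch of what an inventory check might look like. Everything in it is hypothetical and only for illustration: the `AISystem` record, the `APPROVED_FOUNDATION` value, and the sample entries are my own assumptions, not a real product or standard. The point is simply that each AI system gets recorded with the model it sits on, a named internal owner, and an IT approval flag, and anything that fails one of those checks gets surfaced.

```python
from dataclasses import dataclass

# Hypothetical policy value: the one foundation chosen at the executive level.
APPROVED_FOUNDATION = "company-standard-llm"


@dataclass(frozen=True)
class AISystem:
    """One entry in a hypothetical inventory of AI systems in production."""
    name: str
    foundation_model: str   # which LLM the system is built on
    internal_owner: str     # team accountable after the vendor rolls off
    it_approved: bool       # whether IT reviewed the architecture


def policy_violations(system: AISystem) -> list[str]:
    """Return the reasons a system breaks the policy (empty if compliant)."""
    problems = []
    if system.foundation_model != APPROVED_FOUNDATION:
        problems.append(
            f"built on {system.foundation_model}, not {APPROVED_FOUNDATION}"
        )
    if not system.internal_owner.strip():
        problems.append("no internal owner")
    if not system.it_approved:
        problems.append("no IT approval")
    return problems


def audit(inventory: list[AISystem]) -> dict[str, list[str]]:
    """Map each non-compliant system name to its list of violations."""
    report = {}
    for system in inventory:
        problems = policy_violations(system)
        if problems:
            report[system.name] = problems
    return report


# Made-up sample entries, purely for illustration.
inventory = [
    AISystem("supply-chain-agent", "company-standard-llm", "ops-platform-team", True),
    AISystem("marketing-copy-tool", "other-vendor-llm", "", False),
]

for name, problems in audit(inventory).items():
    print(f"{name}: {'; '.join(problems)}")
```

A check like this is only as good as the inventory behind it. The harder work is getting every team and vendor to register what they build in the first place, which is why the approval step has to come before anything reaches production.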

That’s how you prevent abandonment.

I think 2026 is going to be full of stories about Frankenstein AI going out of control. Systems making bad decisions. Data drifting. Costs exploding. Companies realizing too late that no one actually understands the AI machinery running critical parts of their business.

The smart ones will get ahead of this now. Before the monster is fully assembled. Before it becomes business-critical. Before it breaks something they can’t easily fix.

Because Frankenstein AI doesn’t kill companies overnight. It slowly erodes trust, reliability, and control. And by the time you realize what you’ve built, it’s already too late.